Autonomous Targeting Vision System

Computer vision system and hardware design

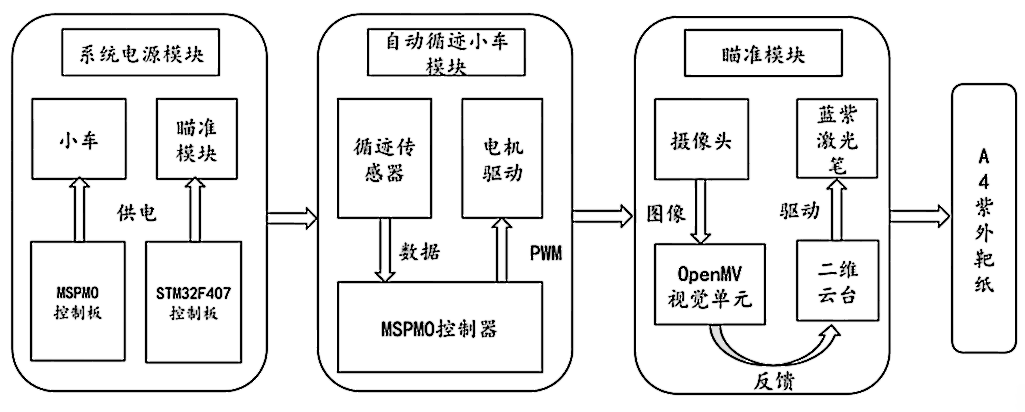

Overview

This project is an autonomous targeting system that integrates computer vision, embedded control, and precision mechanics. It applies classical computer vision algorithms on modern embedded platforms to achieve real-time target detection and trajectory control. It builds on the STM32 development experience (Keil5, HAL library) I gained during my freshman year and extended through further self-study, and it puts multi-module communication protocols and perspective-adaptive algorithms into practice.

Technical Achievements: targeting error under 1.5 cm, real-time circle generation with less than 1/4 cycle of synchronization error, and UART communication between the vision and control modules with under 30 ms latency.

System Demonstration

Technical Architecture

Hardware Selection & Comparison

OpenMV H7

- ✅ Rapid prototyping

- ✅ Compact size

- ✅ MicroPython

K230

- ⚡ High AI performance

- ⚖️ Moderate size

- 🔧 Complex development

Jetson Nano

- 🚀 Mature ecosystem

- ❌ Large footprint

- ⏰ Long dev cycle

Core Technologies

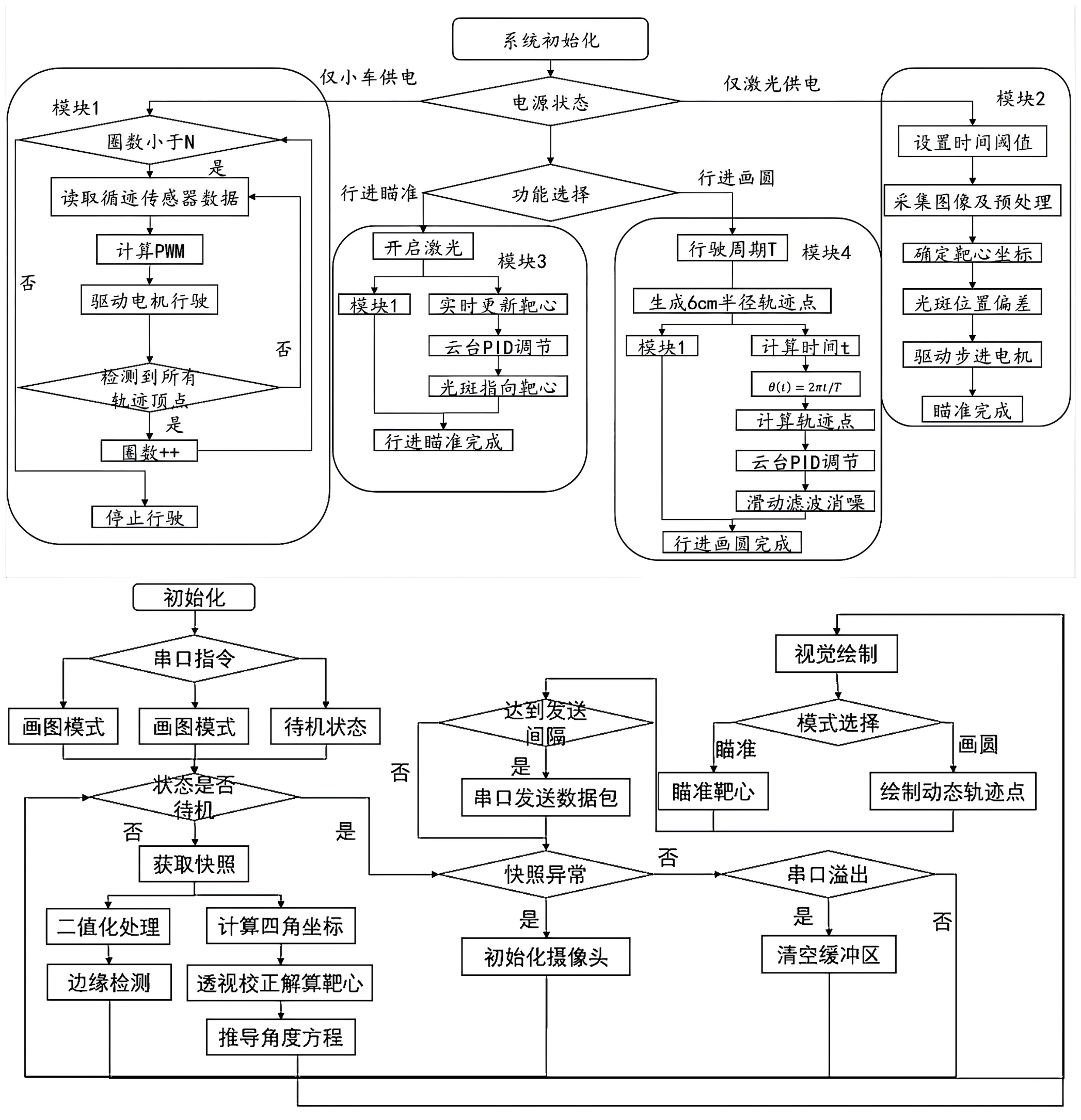

1. Perspective-Adaptive Target Detection

- Classical Computer Vision: Blob detection with multi-threshold filtering

- Edge Boundary Checking: Robust false-positive elimination

- Diagonal Intersection Method: Precise center calculation under perspective distortion
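The diagonal intersection idea can be sketched in a few lines. A perspective projection maps straight lines to straight lines, so the true center of a rectangular target lands where the two diagonals of its image cross, whereas the bounding-box center drifts under tilt. The function name and the corner ordering below are assumptions for illustration; the corner points are assumed to come from the detection step.

```python
def diagonal_intersection(corners):
    """Return the (x, y) point where the diagonals of a quadrilateral cross.

    corners: four (x, y) tuples in order around the shape, for example
    top-left, top-right, bottom-right, bottom-left (assumed ordering).
    Returns None if the diagonals are parallel (degenerate shape).
    """
    (x1, y1), (x2, y2), (x3, y3), (x4, y4) = corners
    # Diagonal A runs corner 1 -> corner 3, diagonal B runs corner 2 -> corner 4.
    dax, day = x3 - x1, y3 - y1
    dbx, dby = x4 - x2, y4 - y2
    denom = dax * dby - day * dbx  # 2D cross product of the two directions
    if abs(denom) < 1e-9:
        return None
    # Solve p1 + t * dA = p2 + s * dB for t using 2D cross products.
    t = ((x2 - x1) * dby - (y2 - y1) * dbx) / denom
    return (x1 + t * dax, y1 + t * day)


# A trapezoid, as a rectangle might appear when viewed at an angle.
center = diagonal_intersection([(0, 0), (10, 0), (8, 10), (2, 10)])
```

For this trapezoid the diagonals cross at y = 6.25, while the bounding-box center sits at y = 5, which is the kind of offset the method is meant to remove.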

2. Real-time Circle Generation Algorithm

- Perspective Compensation: Dynamic ellipse parameter calculation

- Synchronization Control: Vehicle motion and drawing trajectory matching

- Smooth Interpolation: Angular velocity coordination for seamless operation
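Perspective compensation can be illustrated with a simplified model. The sketch below assumes the target's physical size is known, so a pixels-per-centimeter scale can be measured separately along each image axis from the detected target; a circle on the target plane is then approximated as an ellipse with those two scales. The function names and the per-axis scale model are assumptions for illustration, not the project's actual algorithm, and a full treatment would use a homography instead.

```python
import math


def pixel_scale(apparent_px, physical_cm):
    """Pixels per centimeter along one image axis (hypothetical helper)."""
    return apparent_px / physical_cm


def ellipse_points(cx, cy, radius_cm, px_per_cm_x, px_per_cm_y, steps=36):
    """Approximate the image of a circle drawn on a tilted target plane.

    Returns `steps` (x, y) pixel positions, evenly spaced in angle.
    """
    a = radius_cm * px_per_cm_x  # horizontal semi-axis in pixels
    b = radius_cm * px_per_cm_y  # vertical semi-axis in pixels
    points = []
    for i in range(steps):
        theta = 2 * math.pi * i / steps
        points.append((cx + a * math.cos(theta), cy + b * math.sin(theta)))
    return points


# Example: a 10 cm wide target that appears 100 px wide but only 50 px tall.
sx = pixel_scale(100, 10)
sy = pixel_scale(50, 10)
trajectory = ellipse_points(160, 120, 6, sx, sy, steps=36)
```

Recomputing the two scales on every frame lets the ellipse follow the changing viewing angle as the vehicle moves.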

3. Multi-Module Communication Protocol

- UART-based: Custom frame protocol with error detection

- Real-time Coordination: <30ms latency between vision and control

- Robust Data Transfer: Frame validation and recovery mechanisms
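Frame validation and recovery on the receiving side might look like the sketch below. It assumes the 6-byte layout shown under Communication Protocol (header `0x3C 0x3B`, one byte each for x and y, footer `0x01 0x01`) and is written in Python for illustration; the function name and the byte-by-byte resynchronization strategy are assumptions, not the project's receiver code.

```python
HEADER = b"\x3c\x3b"
FOOTER = b"\x01\x01"
FRAME_LEN = 6


def extract_frames(buffer):
    """Scan received bytes and return (list of (x, y), unconsumed remainder)."""
    coords = []
    i = 0
    while len(buffer) - i >= FRAME_LEN:
        candidate = buffer[i:i + FRAME_LEN]
        if candidate[:2] == HEADER and candidate[4:] == FOOTER:
            coords.append((candidate[2], candidate[3]))
            i += FRAME_LEN
        else:
            i += 1  # resync: skip one byte and look for the next header
    # Keep any trailing partial frame so it can be completed by later bytes.
    return coords, buffer[i:]


# Two noise bytes, one complete frame, then the start of a second frame.
good = bytes([0x3C, 0x3B, 120, 80, 0x01, 0x01])
frames, leftover = extract_frames(b"\xff\x00" + good + good[:3])
```

Checking both header and footer at fixed offsets rejects most misaligned reads, but coordinate values can coincide with the header bytes, so a checksum field would be a common strengthening if the frame format allowed it.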

Performance Metrics

| Metric | Requirement | Achieved | Status |
|---|---|---|---|
| Targeting Accuracy | < 2 cm | < 1.5 cm | ✓ |
| Circle Radius Precision | 6 cm ± 0.5 cm | 6 cm ± 0.3 cm | ✓ |
| Synchronization Error | < 1/2 cycle | < 1/4 cycle | ✓ |
| Processing Latency | < 50 ms | < 30 ms | ✓ |

Implementation Details

Vision Processing Module

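    # Core blob detection with multi-criteria filtering.
    # BLACK_THRESHOLD, min_pixels, min_area, and the two helper functions
    # are part of the project code and are not shown here.
    def find_target_boundary_rect(img):
        # Find dark regions, discarding blobs that are too small
        blobs = img.find_blobs([BLACK_THRESHOLD],
                               pixels_threshold=min_pixels,
                               area_threshold=min_area)

        # Edge boundary validation
        valid_blobs = filter_edge_touching_blobs(blobs)

        # Perspective center calculation
        return calculate_diagonal_intersection(valid_blobs)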
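The edge boundary validation step is not shown in the snippet, so here is a minimal sketch of one way it could work: reject any candidate whose bounding rectangle touches or comes within a small margin of the image border, since such a shape is probably cut off by the frame. The function name, the `margin` parameter, and the use of plain `(x, y, w, h)` tuples are assumptions for illustration; on OpenMV the tuples could be taken from each blob's `rect()`.

```python
def filter_edge_touching(rects, img_w, img_h, margin=2):
    """Keep only (x, y, w, h) rectangles that lie fully inside the frame."""
    kept = []
    for (x, y, w, h) in rects:
        touches_edge = (
            x <= margin
            or y <= margin
            or x + w >= img_w - margin
            or y + h >= img_h - margin
        )
        if not touches_edge:
            kept.append((x, y, w, h))
    return kept


# Three candidates in a 320x240 frame: left edge, interior, right edge.
candidates = [(0, 10, 20, 20), (50, 50, 20, 20), (300, 100, 20, 20)]
inside = filter_edge_touching(candidates, 320, 240)
```

Only the interior rectangle survives, so partially visible targets never reach the center calculation.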

Communication Protocol

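    import struct

    # Custom UART frame structure: 2-byte header, x, y, 2-byte footer.
    # `uart` is an already-initialized UART object (setup not shown here).
    def send_coordinates(x, y):
        # 'B' packs one unsigned byte, so x and y must be integers in 0..255
        frame = struct.pack('<BBBBBB',
                            0x3C, 0x3B,   # Header
                            x, y,         # Coordinates
                            0x01, 0x01)   # Footer
        uart.write(frame)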

Results & Recognition

This project was successfully implemented and tested, achieving all target specifications. The system was later applied in the 2025 National College Student Electronic Design Contest, earning Provincial First Prize.

Key Contributions:

- Designed and implemented complete vision processing system

- Achieved targeting error below 1.5 cm under various conditions

- Developed novel perspective-adaptive circle generation algorithm

- Established robust real-time communication between vision and control modules

Technical Stack

Hardware

- OpenMV Cam H7 + Variable Focus Lens

- STM32 Main Controller (Keil5, HAL Library)

- MSPM0 Motor Controller

- High-precision Servo Motors

Software

- MicroPython (OpenMV)

- Classical Computer Vision

- Real-time Control Algorithms

- Custom Communication Protocols

This project integrates computer vision, embedded systems, and precision control to solve a real-world targeting problem, and it shows theoretical knowledge of embedded system design applied in practice.